Table of Contents

- Overview

- Comparison with gpt-image-1, gpt-image-1-mini models

- Side by Side – “A person calms a rearing horse”

- Quality Settings Impact Results

- Practical Implications

- What’s Next?

OpenAI Image Gen 1.5 Hits the Juice December 2025

A Comprehensive Quality Analysis between gpt-image-1.5 (new) vs gpt-image-1, gpt-image-1-mini (existing)

After my recent look at gpt-image-1-mini I’m excited to bring you the results for the brand spanking gpt-image-1.5. And wow what a difference. gpt-image-1.5 is flexing, gigachad and sigma. Put alongside previous models, 1.5 can snap you like twig.

Comparison with gpt-image-1, gpt-image-1-mini models

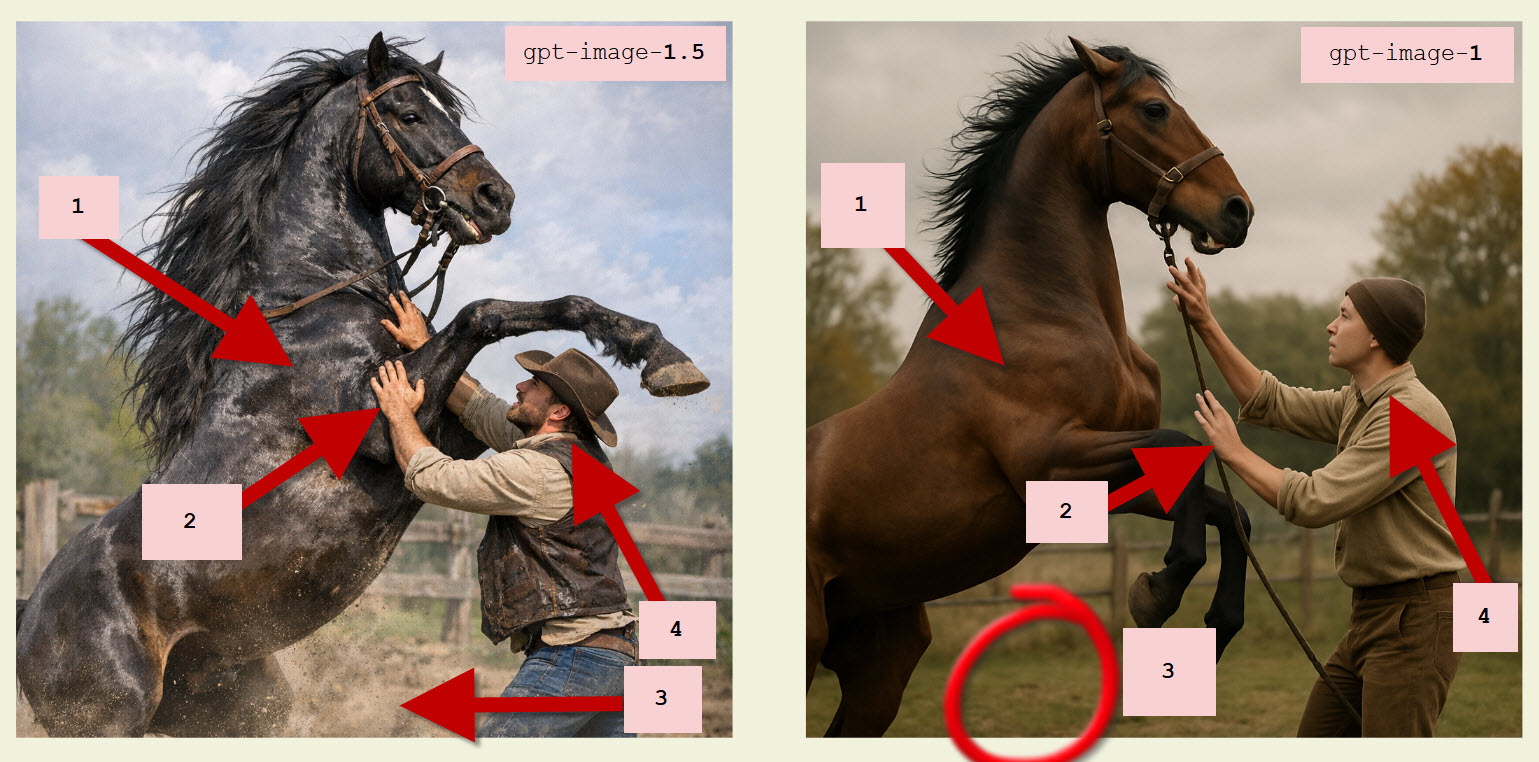

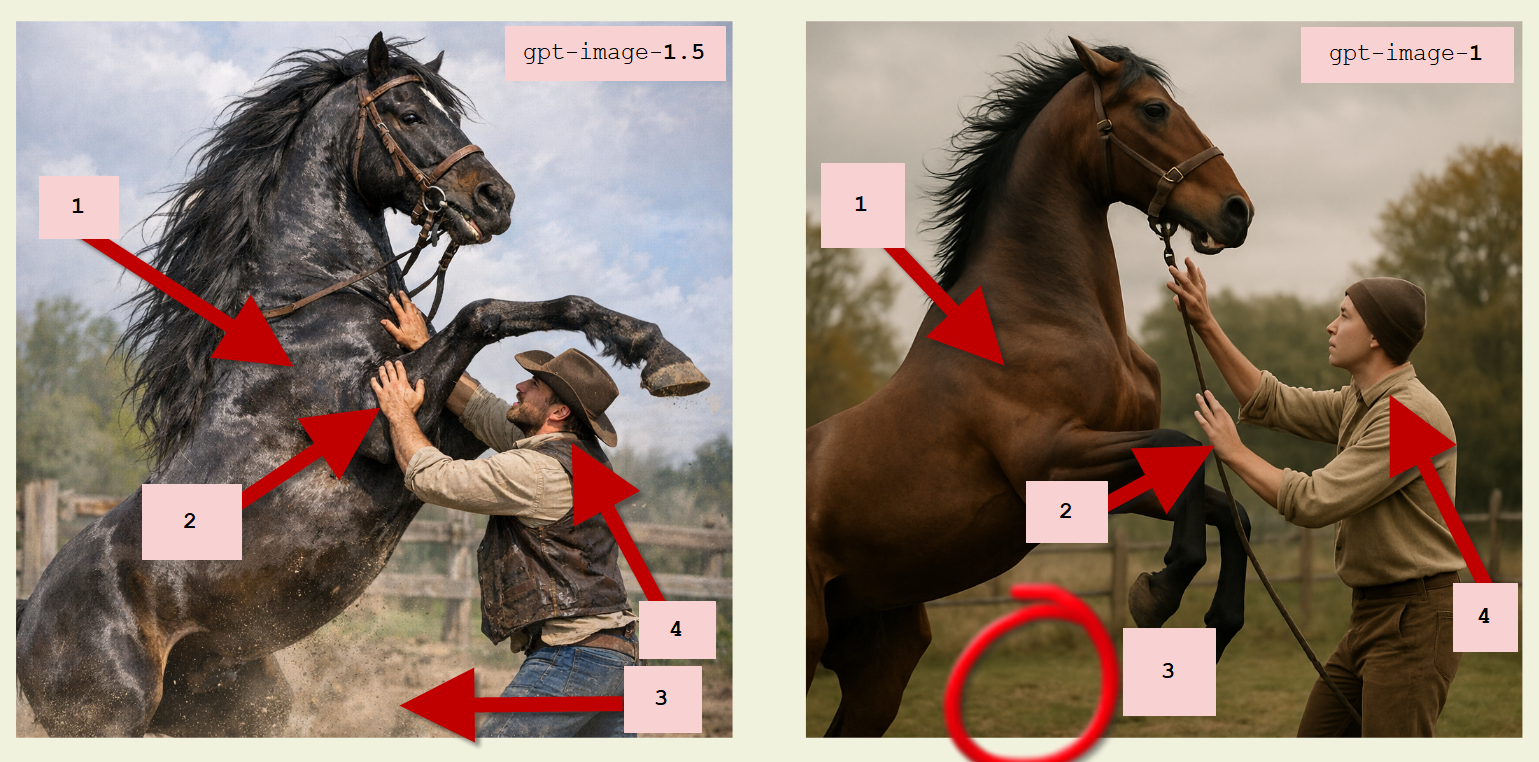

The 1.5 model has hit the juice and is out to steal your gf. Everything is musclier, more kinetic, more dynamic. Often at the expense of realism. The horses are now paleo-Clydesdales horses of The Gods. The person is now a hirsute gnarly wrangler who was too tough for Yellowstone. Dust swirls everywhere as the two do their dance. Light comes in to play with realism enhancing lit and shaded portions adding depth.

- 1. Sheen and Muscliness. 1.5 is muscly and ripped. And the horse’s coat shines with a lustrous sheen as you admire the sunshine. In contrast gpt-image-1 is matter of fact, the photo taken on a hum drum dull day.

- 2. Wrangler’s arms. 1.5 looks like he’s wrangled before, maybe from birth. gpt-image-1 looks like a white collar worker was thrust into a paddock after they lost a bet and nervously complied.

- 3. Dust spray. 1.5 captures the drama by spraying realistic dust everywhere. gpt-image-1 remains “mundane office team building away day” style

- 4. Wrangler head. 1.5 the gigachad. gpt-image-1 has a vague air of concern from someone ill suited to the situation.

Findings: gpt-image-1.5 “A person calms a rearing horse”

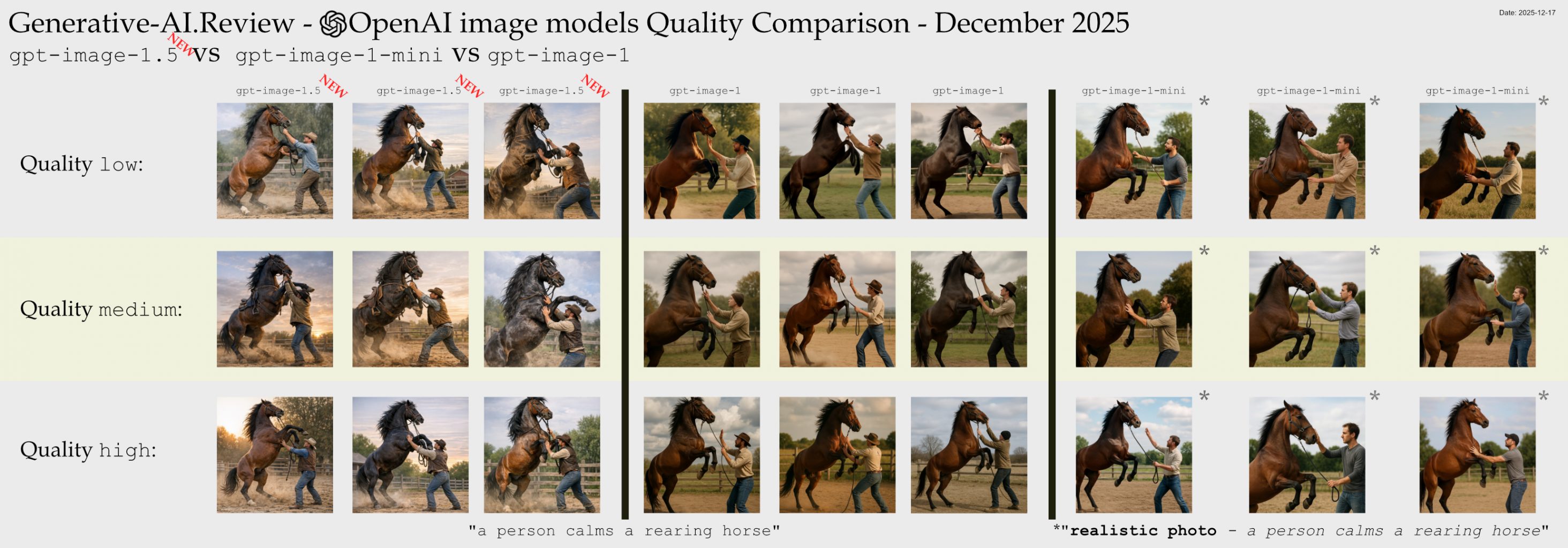

Click for an enormous zoomable version: large 7720x2700px raw link

Technical Note

The middle column was made by gpt-image-1-mini with exactly the same prompt as last time "A person calms a rearing horse". Something has changed in the default style of the mini model such that the output was a drawing. Previous the gpt-image-1 default output style had been photographic realism. So to aid the task of comparison, I also generated the rest with a slightly modified prompt "realistic photo - a person calms a rearing horse". This is a mild change which should preserve most of the original characteristics for side-by-side comparison between models. The generations with the modified prompt are marked with an asterisk (*)

gpt-image-1.5 Low Glitches

Compared to the other models there are few glitches

- The reigns go behind the neck incorrectly

- The reigns cord at the bottom fades away

gpt-image-1-mini Medium Glitches

There were no major glitches that I spotted in Medium.

gpt-image-1-mini High Glitches

There were no major glitches that I spotted in High.

Quality Settings Results

The low is now really very good. Higher values do get better light, better hair, better lighting, better drama. But check for yourself, they’re all really great.

Practical Implications

For practical applications, these findings suggest:

- The image has more kinetic energy, it has been to the gym and it now benches more than you do.

- All quality settings acceptable: Low seems fine with no obvious defects. You’re going to have to carefully check “medium” and “high” are worth the extra dosh.

- Realism has been sacrificed for Juicy Muscly Drama.

The Testing Approach

The Testing Approach

For this expanded analysis, I selected one (two coming soon)prompts specifically designed to test different aspects of image generation capabilities:

"A person calms a rearing horse"– This tests the model’s ability to handle human figures, dynamic action, and anatomical accuracy of both humans and animals in interaction."A fruit cart tumbles down some stairs. The fruit cart sign reads 'Apple-a-day'"– COMING SOON

Following Edward Tufte’s visualization principles, I’ve arranged the outputs in small multiples to facilitate direct visual comparison. For each prompt, I generated multiple images across different quality settings to evaluate consistency, feature accuracy, and stability.

For previous legacy models dall-e-3 and dall-e-2 see the previous article

What’s Next?

Would you be interested in seeing a regular sample of image generations using precisely the same prompts to monitor generation stability over time? This could provide valuable insights into how the model evolves with updates and fine-tuning.

I’m also considering expanding this analysis to include more specialized prompts targeting specific capabilities like architectural rendering, facial expressions, or complex lighting scenarios.