Table of Contents

- Overview

- “A person calms a rearing horse”

- “A fruit cart tumbles down some stairs. The fruit cart sign reads ‘Apple-a-day'”

- Quality Settings Impact Results

- Practical Implications

- What’s Next?

Comparing OpenAI Image Generation 4o: A Comprehensive Quality Analysis

After my initial proof-of-concept comparison gained traction on Hacker News and AI Twitter, I decided to conduct a more thorough evaluation of quality impact OpenAI’s newly released Image Generation 4o API. This follow-up addresses the valuable feedback from the HN community, who rightfully suggested testing more challenging prompts than my original "a cute dog hugs a cute cat" comparison.

The Testing Approach

For this expanded analysis, I selected two prompts specifically designed to test different aspects of image generation capabilities:

"A person calms a rearing horse"– This tests the model’s ability to handle human figures, dynamic action, and anatomical accuracy of both humans and animals in interaction."A fruit cart tumbles down some stairs. The fruit cart sign reads 'Apple-a-day'"– This challenges the model with complex physics (tumbling objects), multiple elements (fruit, cart, stairs), and text rendering.

Following Edward Tufte’s visualization principles, I’ve arranged the outputs in small multiples to facilitate direct visual comparison. For each prompt, I generated multiple images across different quality settings to evaluate consistency, feature accuracy, and stability.

I’ve also included the legacy models dall-e-3 and dall-e-2 so you can get an idea of the advancement over time

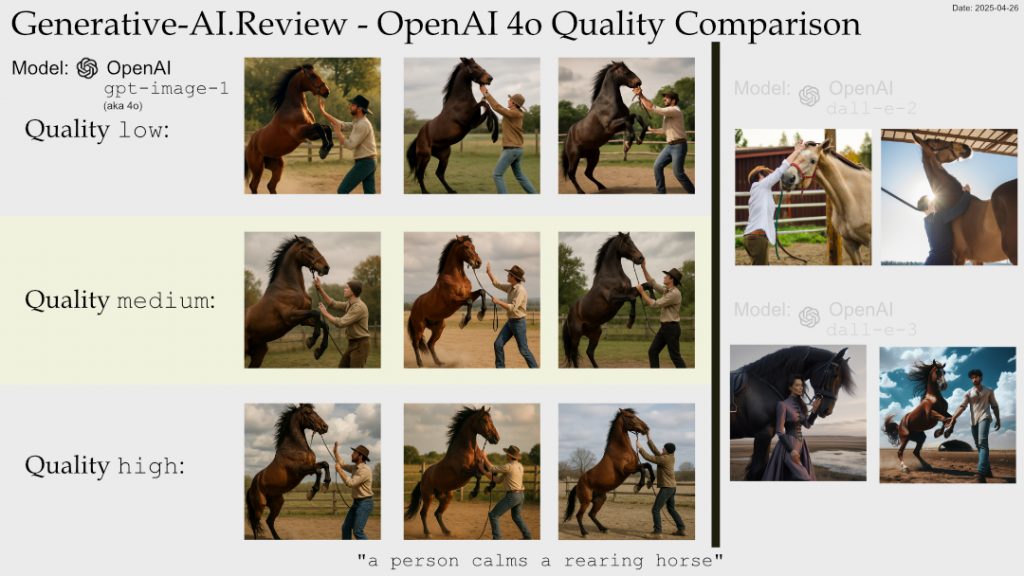

Findings: “A person calms a rearing horse”

Click for an enormous zoomable version: large 4800x2700px raw link

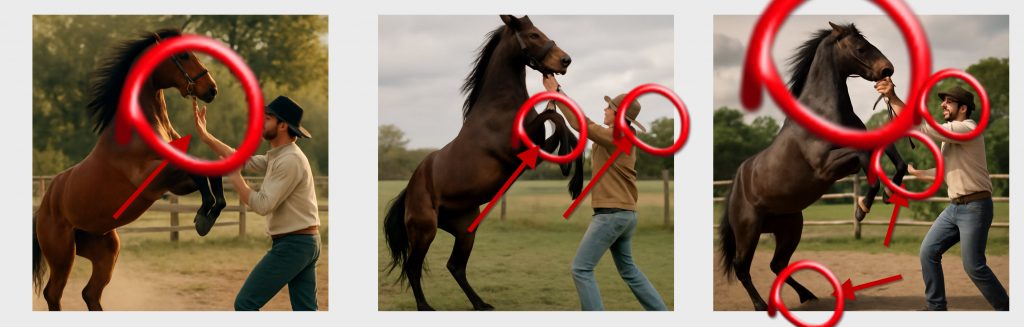

Low Glitches

Just looking at the Low images, the glitches are quite obvious even at first glance

- Left Hand Side

- The horse has no reigns

- The person seems not to be calming the horse, more like doing a Wing Chung move

- Middle

- The Horse’s front legs have sky poking through

- The person’s hat is longer at the rear than the front

- Right Hand Side

- The Horse has no hoof

- The Horse’s head is malformed

- The person has no fingers on one hand

- The person chosen looks unsuited to a Horse Calming situation

Medium Glitches

There were no major glitches that I spotted in Medium. The left hand medium person looks more like a horse thief than a horse calmer. Let me know if your keen eyes spot some and I will update this post with credit.

High Glitches

Looking at the High Glitches there are still some glitches but more subtle

- Middle

- The handlers arm is impossibly long

- Finger count fail. I count 3 fingers.

- Right Hand Side

- The handler looks more like a Horse Thief than someone versed in calming a rearing horse

The “rearing horse” prompt revealed several interesting patterns:

- Anatomical accuracy: At higher quality settings, the model consistently produced accurate horse anatomy, particularly in the challenging pose of rearing. Lower quality settings sometimes produced distorted limbs or proportions.

- Human-choice: The choice of horse wrangler was better

- Clarity: The pictures have better clarity and lighting at medium and high

Comparison with legacy DALL:E models

The dall-e-3 and dall-e-2 images are on the right hand section beyond the thick back separator. Each image is radically different from each other. The instructions are jettisoned, the horse is not always rearing and the people are not always calming the horse. One person on the bottom right is not even looking at the rearing horse, let alone calming it.

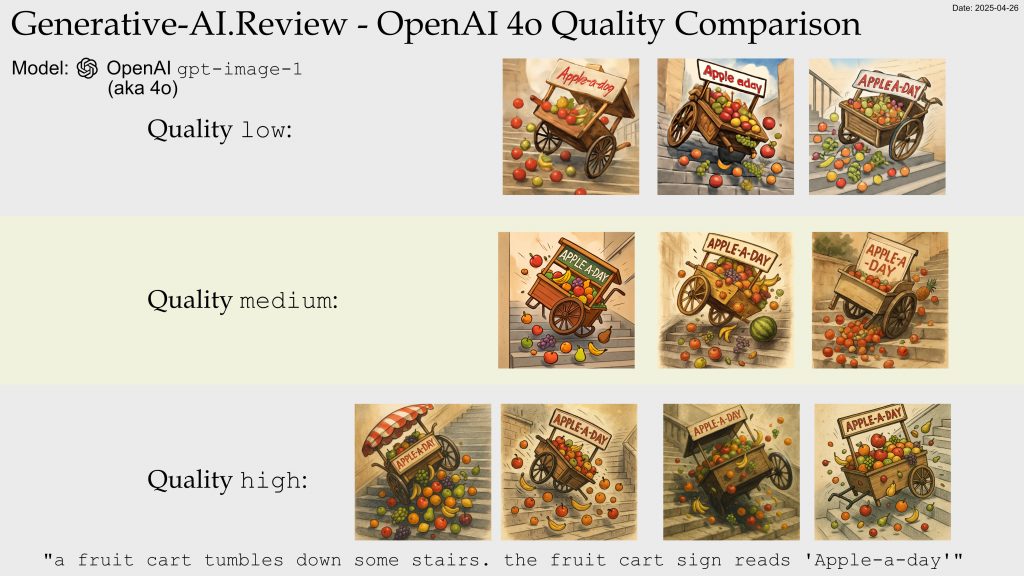

Findings: “A fruit cart tumbles down some stairs. The fruit cart sign reads ‘Apple-a-day'”

Click for an enormous zoomable version: large 4800x2700px raw link

This more complex prompt highlighted several capability edges:

- Text rendering: The “Apple-a-day” text was correctly rendered:

- High: 4/4

- Medium: 2/3

- Low: 0/3 (Apple-a-dog)

- Fruit fidelity:

- High: Good, variety

- Medium: Okay, one had less variety of fruit

- Low: Draft quality

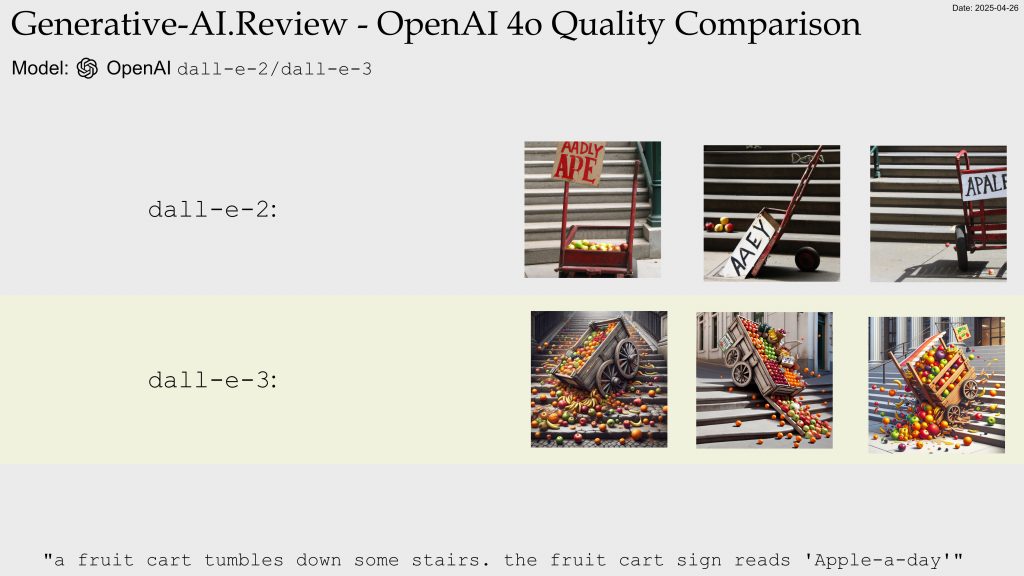

Comparison with legacy DALL:E models

Here are some from the earlier generation image generation models. Again, this is the same prompt.

The main point I want to make is that each generated image is basically independent. The newer models generate more consistent results between calls, and also between quality levels. So it is possible to generate at draft (‘low’) quality and then have a bit of a guide what ‘medium’ and ‘high’ will generate.

Click for an enormous zoomable version: large 4800x2700px raw link

Quality Settings Impact Results

The API’s quality settings demonstrated a clear progression in output fidelity, with notable differences in:

- Anatomical accuracy

- Text legibility

- Compositional coherence

- Scene Lighting

- Feature Detail

Higher quality settings predictably produced better results, but also revealed interesting threshold effects where certain capabilities (like accurate text rendering) became significantly more reliable at high quality level.

Practical Implications

For practical applications, these findings suggest:

"low"gives a good hint at the approach higher quality settings will offer. It’s no longer completely independent images. YMMV- Choose quality settings strategically: Lower settings may be sufficient for simpler compositions, or as a guide to what higher levels will creaite. Complex scenes with text or precise action benefit substantially from higher settings.

- Expect variability: Even at highest quality, certain elements may still require multiple generations to achieve desired results.

- Prompt complexity matters: The performance gap between quality settings widens as prompt complexity increases.

What’s Next?

Would you be interested in seeing a regular sample of image generations using precisely the same prompts to monitor generation stability over time? This could provide valuable insights into how the model evolves with updates and fine-tuning.

I’m also considering expanding this analysis to include more specialized prompts targeting specific capabilities like architectural rendering, facial expressions, or complex lighting scenarios.

Let me know by twitter DM which aspects of image generation you’d most like to see evaluated in future comparisons!